Demystifying the Algorithm: Who Designs your Life?

- 26 June 2015

I live in Amsterdam. In Amsterdam we bike everywhere. Often, I use Google Maps to see what route I should cycle and how long it will take. Recently I started noticing something strange: Google doesn't seem to like routing me along the canals. Even when I am sure that it is the quickest way to get somewhere.

This talk was delivered at re:publica 15 on May 7th 2015. The audio of the talk has been recorded.

No Google route through the canals

My sceptical friends were convinced that the rich homeowners who live on the canals must have paid off Google to divert the traffic. I am more inclined to blame it on some glitch. The Google Maps algorithm is probably made to suit the street plan of San Francisco rather than the layout of a World Heritage Site designed in the 17th century.

The Hollywood sign from one of the trails

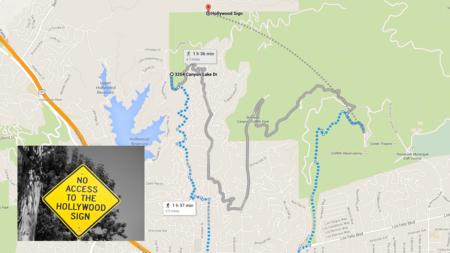

Then I read the story about a small group of LA citizens who have been petitioning the city governments and mapmakers, trying to get them to make the iconic ‘Hollywood Sign’ disappear from the virtual map. They didn’t like how tourists created traffic problems and became a safety hazard by parking their cars in the neighbourhood near the sign. So now, when you choose the Hollywood sign as your destination, Google Maps directs you to a viewing platform a few miles away.

Google directing you to a viewing platform

This is an example of Google having a very direct influence on people’s lives. And this influence is ubiquitous. It is now Google who decides on the route I take from the train station to the theatre. By allowing that, I’ve decided to trust the implicit assumptions in Google’s geo team about what I find important.

The incredible amount of human effort that has gone into Google Maps, every design decision, is completely mystified by a sleek and clean interface that we assume to be neutral. When these internet services don’t deliver what we want from them, we usually blame ourselves or “the computer”. Very rarely do we blame the people who made the software.

In 1999 Lawrence Lessig wrote ‘Code and Other Laws of Cyberspace’. In it he explained how ‘code’ can be a regulator of behaviour, just like law. Consider this recent news story about a motorist who gets trapped on a roundabout for 14 hours.

Motorist trapped in traffic circle for 14 hours

Peter Newone said he felt as if a nightmare had just ended. Newone, 53, was driving his newly purchased luxury car when he entered the traffic circle in the city center around 9am yesterday. The car was equipped with the latest safety features, including a new feature called ‘Lane Keeping’. “It just wouldn’t let me get out of the circle,” said Newone. “I was in the inner-most lane, and every time I tried to get out, the steering wheel refused to budge and a voice kept saying over and over, ‘warning, right lane is occupied.’ I was there until 11 at night when it finally let me out,” Newone said from his hospital bed, his voice still shaky. Police say they found Newone collapsed in his car, incoherent. He was taken to the Memorial Hospital for observation and diagnosed with extreme shock and dehydration. He was released early this morning.

Peter Newone’s story isn’t real, but it feels real. It was created by design psychologist Don Norman to illustrate how little attention is paid to human cognitive abilities when designing the things we use every day. His 2006 fake story was prescient in at least one way: our cars have stopped listening to us and are now behaving according to the design decisions made by some faraway programmer.

Consider the story of T. Candice Smith:

The starter interrupt device allows lenders to remotely disable the ignition and to track the car’s location. These types of devices have been installed in about two million cars in the US. For me this story is symptomatic of the distance that technology can create between the person owning the technology and the person suffering its effects.

The way that code regulates is often very absolute. Lessig writes that our behaviour on the internet is mostly regulated by code. As the network moves into everything around us and more and more of our life is mediated by technology we didn’t design and don’t own, we will be confronted more often with technology creating boundaries that are, quite literally, inhumane. This is my main reason for working at digital rights organization Bits of Freedom. If we don’t change our ways we will increasingly be beholden to the limited imagination of the developers that are creating our world. Let me give you a couple of examples.

I’ll start with a very trivial example that does make the point quite clearly.

Hilton Paddington in London and Hilton Doubletree in Amsterdam

I have a friend who works for a giant multinational and lives in the East of the Netherlands. Like any good corporate bureaucrat he makes sure to collect as many loyalty points for his business trips as he possibly can. One day he had to be in London so he had a reservation for a few nights at the Hilton there. Then the weather became very stormy and he didn’t know whether his plane would be able to leave the Amsterdam airport. Just to make sure that he would have a place to sleep he also booked a room at the Hilton in Amsterdam. I believe he slept in Amsterdam that night and made it to London the next day. There he was surprised to learn that he had received no loyalty points for the night (even though his company paid for two nights). The developer who had made the system couldn’t imagine somebody spending a night in two different hotel rooms and had created an anti-fraud measure in the loyalty software blocking the awarding of points in this unusual situation.

This example is relatively harmless, but it does show how not being able to think of all the options can be less than helpful. Let me give you another example.

LinkedIn wants to give you as many opportunities as possible to connect with your ‘valuable’ professional contacts. So whenever somebody has been in a particular job for a set number of years, LinkedIn will tell you that that person has “a work anniversary” and is celebrating x number of years with their employer. You can then ‘like’ this fact or leave your congratulations in a comment.

A lot of people use LinkedIn to find jobs too. What do you enter in the ‘Employer’ field when you don’t have a job? Some people fill in that they are ‘Unemployed’. This is then how, just the other day, a colleague came to be asked to ‘like’ the fact that one of his contacts was celebrating that he had been unemployed for one year.

Reconstructed version of a LinkedIn profile page urging you to ‘like’ unemployment
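
A minimal sketch of how such a notification could come about, and of the single check that would have prevented it. This is not LinkedIn’s actual code: the `Position` type, the `NOT_AN_EMPLOYER` set and both functions are hypothetical names, invented here purely for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical list of things people type into the 'Employer' field when
# they are between jobs. A real system would need a far richer check.
NOT_AN_EMPLOYER = {"unemployed", "looking for work", "none", "n/a"}


@dataclass
class Position:
    person: str
    employer: str  # free text, exactly as the user entered it
    start: date


def years_in_position(position: Position, today: date) -> int:
    """Whole years between the start date and today."""
    years = today.year - position.start.year
    if (today.month, today.day) < (position.start.month, position.start.day):
        years -= 1
    return years


def naive_anniversary(position: Position, today: date) -> Optional[str]:
    """Celebrates every anniversary, whatever the 'employer' text says."""
    is_anniversary = (today.month, today.day) == (
        position.start.month,
        position.start.day,
    )
    years = years_in_position(position, today)
    if is_anniversary and years >= 1:
        return (
            f"Congratulate {position.person} on {years} year(s) "
            f"at {position.employer}!"
        )
    return None


def considerate_anniversary(position: Position, today: date) -> Optional[str]:
    """Same rule, but stays silent when the field does not name an employer."""
    if position.employer.strip().lower() in NOT_AN_EMPLOYER:
        return None
    return naive_anniversary(position, today)
```

The naive version is not buggy in any technical sense. It simply assumes that whatever is in the ‘Employer’ field is something worth celebrating, and that assumption is a design decision somebody made.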

In late 2014 Facebook’s ‘Year in Review’ took ‘algorithmic cruelty’ to a new level. The ‘Year in Review’ functionality allows Facebook users to quickly pick some memorable pictures from the past year and display them in a sharable virtual album. Facebook advertised this feature to its users by pushing a picture in their timeline urging the user to “See Your Year”.

Facebook’s 2014 ‘Year in Review’

For Cascading Style Sheets guru Eric Meyer this was incredibly painful. I’ll quote from his blog:

I didn’t go looking for grief this afternoon, but it found me anyway, and I have designers and programmers to thank for it. In this case, the designers and programmers are somewhere at Facebook. I know they’re probably pretty proud of the work that went into the “Year in Review” app they designed and developed [..]. Knowing what kind of year I’d had, though, I avoided making one of my own. I kept seeing them pop up in my feed, created by others, almost all of them with the default caption, “It’s been a great year! Thanks for being a part of it.” [..] Still, they were easy enough to pass over, and I did. Until today, when I got this in my feed, exhorting me to create one of my own. “Eric, here’s what your year looked like!”

Eric Meyer being urged to ‘see his year’

A picture of my daughter, who is dead. Who died this year. Yes, my year looked like that. True enough. My year looked like the now-absent face of my little girl. It was still unkind to remind me so forcefully.

Meyer wasn’t the only one who took issue with the algorithmic unkindness. Some quotes from Twitter: “So my (beloved!) ex-boyfriend’s apartment caught fire this year, which was very sad, but Facebook made it worth it.” and “I’m so glad that Facebook made my ‘Year in Review’ image a picture of my now dead dog. I totally wanted to sob uncontrollably this Xmas” or “Won’t be sharing my Facebook Year in Review, which ‘highlights’ a post on a friend’s death in May despite words like ‘killed’ and ‘sad day’”.

According to Meyer it would have been easy enough to withhold from picking pictures by algorithm, and to simply ask a user whether they wanted a ‘Year in Review’ or not. He considers it an “easily-solvable” problem: the app only needed to be designed with more worst-case scenarios in mind. I think his solutions are correct, but the problem actually lies somewhere else. The insensitive way that ‘Year in Review’ was implemented is a consequence of it being designed by people who live in a tech bubble which is disconnected from the rest of the world. For these types of problems to stop occurring, Silicon Valley will have to become radically more diverse.

Look at these graphs:

Diversity at major tech companies

They show the ethnicity and gender distribution at major technology companies. They tell a pretty clear story: the median person working for these companies is male and white. There is some data missing though. These people are also very young: the median age at Facebook is 28, at LinkedIn it is 29 and at Google it is 30. And they are relatively affluent of course.

Are we surprised that it might be hard for a young, privileged guy in his twenties working at one of the tech-campuses in sunny California to imagine that not everybody might have had a ‘great year’? That other people might have had to deal with loss? I recognize this in myself: as a 28 year old who had never had any setbacks in his whole life (and who thought he was smarter than everybody else), I certainly could have made the same mistake as the developers behind ‘Year in Review’.

After a female software engineer started calling for data on the gender gap (a perspective that could only come from a true engineer: you cannot solve a problem without having data), a lot of companies have started to become more transparent about their diversity problems. On its global diversity page Google writes: “We’re not where we want to be when it comes to diversity.” The page also lists their ‘Employee Resource Groups’. Like the ‘Gayglers’…

Gayglers at Chicago Pride

… here at Chicago Pride with, what I imagine to be, same sex Androids holding hands on their T-shirts. Or the ‘Greyglers’…

Google has to be lauded for their openness and for their effort. But when you dig a little deeper you learn that Google thinks there are two root causes for their diversity issues. First, there is a lack of non-white or non-Asian and non-male coding talent. The senior VP of people operations, for example, said the following in an interview last year:

In recruiting African-American computer science PhDs, my team told me this year they did pretty well. They captured [..] 50 percent of all black PhDs in computer science. But they said there were four, and two stayed in academia. So of the available two, we hired one.

Second, they believe their staff members, like everybody else in the world, have an “unconscious bias” that affects the “perception of others, interactions with coworkers and clients, and the business overall.” To solve this they have created a company-wide dialogue to increase the awareness of this problem.

This type of thinking shows that diversity has not yet become a core part of Google’s business strategy. Unfortunately this is the case for most companies in the Valley. This will obviously need to change if we want technology that reflects all of humanity.

Are there other ways that we can reduce the distance between the people who create and own technologies and the people who use them? The distance that makes it easy for a lender to turn off a car at a distance without much thought?

A few years ago I visited the Hiroshima Peace Memorial Museum. After having looked at the melted household objects and having listened to the gruelling witness testimonies, I was in shock and completely puzzled. How could somebody give the executive order to drop an atomic bomb on a city, knowing it would kill tens of thousands of people and injure many more?

Hiroshima right after the atomic bomb had exploded

I am now sure that you can only sign the relevant papers if you are far enough removed from the future victims and if the inherent violence of such an act has been abstracted away from you.

During the Cold War a lot of thought was put into how to design the processes and systems around the nuclear capabilities. Satirical news site ‘The Onion’ cleverly plays with our notion of a big button living on a president’s desk somewhere. They headline their article with “Obama Makes It Through Another Day Of Resisting Urge To Launch All U.S. Nuclear Weapons At Once”.

Obama with the proverbial button on his desk

Of course there are no buttons. But how do you design a system that will prevent the potential moral hazard of a president who is a bit quick to judge? In the early eighties Harvard law professor Roger Fisher came up with a plan. You would have to require a code to start the nuclear machinery and then you would, and I quote:

Put that needed code number in a little capsule, and then implant that capsule right next to the heart of a volunteer. The volunteer would carry with him a big, heavy butcher knife as he accompanied the President. If ever the President wanted to fire nuclear weapons, the only way he could do so would be for him first, with his own hands, to kill one human being. The President says, “George, I’m sorry but tens of millions must die.” He has to look at someone and realize what death is—what an innocent death is. Blood on the White House carpet. It’s reality brought home.

This is a very useful metaphor to help us find solutions to our current technological straits. I like it for two reasons.

The first is that it gives the president a different form of ‘skin in the game’, especially if we imagine that the volunteer would do his best to befriend the president. Right now, making and delivering software comes with zero liability. That is not a smart way to design things in a complex world. ‘Skin in the game’ is one of the core concepts in Nassim Taleb’s most recent book: ‘Antifragile’. In it he refers to the 3,700-year-old Hammurabi code.

The Hammurabi code

This Babylonian set of laws is probably best known for its ‘eye for an eye, tooth for a tooth’ type of rules. Taleb focuses on law number 229 which is about building a house:

If a builder build a house for some one, and does not construct it properly, and the house which he built fall in and kill its owner, then that builder shall be put to death.

In his Black Hat keynote, Dan Geer has translated this rule into simple liability guidelines for software. Clause 1 is as follows: “If you deliver your software with complete and buildable source code and a license that allows disabling any functionality or code the licensee decides, your liability is limited to a refund.” And then clause 2: “In any other case, you are liable for whatever damage your software causes when it is used normally.” According to Geer:

If you do not want to accept the information sharing in Clause 1, you fall under Clause 2, and must live with normal product liability, just like manufacturers of cars, blenders, chain-saws and hot coffee.
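
Geer’s two clauses are simple enough to write down as a decision rule. The sketch below is only one possible reading of them: the `SoftwareDelivery` type, its field names and the `liability` function are hypothetical, and actual legal liability would of course never reduce to a few lines of Python.

```python
from dataclasses import dataclass


@dataclass
class SoftwareDelivery:
    ships_buildable_source: bool    # complete and buildable source code
    license_allows_disabling: bool  # licensee may disable any functionality
    price_paid: float


def liability(delivery: SoftwareDelivery, damage_in_normal_use: float) -> float:
    """Amount the supplier owes under one reading of the two clauses."""
    if delivery.ships_buildable_source and delivery.license_allows_disabling:
        # Clause 1: liability is limited to a refund.
        return delivery.price_paid
    # Clause 2: liable for whatever damage the software causes in normal use.
    return damage_in_normal_use
```

Under this reading, a supplier who ships open, modifiable software for 100 owes at most 100, while a supplier who keeps the code closed owes the full damage.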

Geer’s idea would work on many levels. It would incentivize the industry to use open and proven standards and to not constantly reinvent the wheel. It would increase the level of transparency around the decisions that computers make and give us insight into the algorithms that regulate so much of our life. And most importantly it would likely lead to most software having a manual override in case a particular situation does not fit the generic mold. It would thus help us avoid situations like the following:

The second reason why I like Roger Fisher’s idea of the nuclear code implanted next to the heart is that it enlarges the system boundary of the problem that is being solved. Rather than focusing on how to make sure that nobody can accidentally start a nuclear war without following the correct procedure, Fisher has framed the problem as how to bring humanity into an inhuman decision.

It is this type of intentional design that I would like to see more often. At Yahoo they have used it to make maps.

Routes in London from Euston Square to the Tate Modern

These routes from Euston Square to the Tate Modern were designed by crowdsourcing the places in London that are quiet, that are beautiful or that make people happy. Of course it is great to have an alternative to the relentless logic of efficiency or commercial interest, but what I truly love about these routes is the way that they give me an explicit choice about what I think is important when I travel through the world.
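
To make that explicit choice concrete, here is a small sketch of a route planner that lets the traveller pick what to optimise for. It is not how Yahoo built their maps: it is a plain Dijkstra shortest-path search over a made-up street graph, and the `STREETS` data, the `dullness` score and the `best_route` function are all assumptions for illustration.

```python
import heapq
from typing import Dict, List, Tuple

# Hypothetical street graph. Every edge carries several scores, lower is better.
# 'minutes' is travel time; 'dullness' stands in for a crowdsourced score that
# is low where people said a street is quiet, beautiful or makes them happy.
Edge = Dict[str, float]
Graph = Dict[str, Dict[str, Edge]]

STREETS: Graph = {
    "start": {
        "main road": {"minutes": 5, "dullness": 9},
        "park": {"minutes": 8, "dullness": 1},
    },
    "main road": {"end": {"minutes": 5, "dullness": 9}},
    "park": {"end": {"minutes": 7, "dullness": 2}},
    "end": {},
}


def best_route(
    graph: Graph, origin: str, destination: str, criterion: str
) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm, minimising whichever edge score the traveller picks."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, origin, [origin])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == destination:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, edge in graph[node].items():
            if neighbour not in visited:
                heapq.heappush(
                    queue, (cost + edge[criterion], neighbour, path + [neighbour])
                )
    raise ValueError(f"no route from {origin} to {destination}")
```

Calling `best_route(STREETS, "start", "end", "minutes")` takes the main road, while `best_route(STREETS, "start", "end", "dullness")` goes through the park. The algorithm is identical in both cases; only the value judgement passed in as `criterion` differs, and here it is the traveller who makes it.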

Credits for the images and links to the sources are available here.