Can Design Save Us From Content Moderation?

- 6 December 2017

- Public Arts

Our communication platforms are polluted with racism, incitement to hate, terrorist propaganda and Twitter-bot armies. This essay argues some of that is due to how our platforms are designed. It explores content moderation and counter speech as possible “solutions”, and concludes both fall short. Alternatively, the essay suggests smart design could help mitigate some of our communication platforms’ more harmful effects. How would our platforms work if they were designed for engagement rather than attention?

Afraid of friction

In the 1930s industrial designer Egmont Arens introduced the concept of “humaneering”. Humaneering is the idea that you design products in such a way as to minimize friction between the product and the user.

We’ve heard a lot of similar ideas about design since then. In the fifties Henry Dreyfuss argued that design failed if the point of contact between the thing and the person became a point of friction. Talking about ubiquitous computing, Mark Weiser introduced the idea of “calm” technology in the nineties, where the computer is an extension of our unconscious. One of the best-designed services I use, WeTransfer, challenges itself to work without breaking someone’s “flow”.

The products and technologies we use have back-ends that are far more elaborate and complex than their interfaces allow us to believe. In addition, you could argue that the way in which we’ve traditionally thought about and designed our interactions with products has only further alienated us from those products’ technological, political and social implications.


Things vs. devices

In 1984 Albert Borgmann introduced the distinction between a “thing” and a “device”. A thing is something you experience on many different levels, he said, whereas your interaction with a device is extremely limited, purposefully reduced. The best-known example Borgmann gives of this is the wood-burning stove on the one hand, and central heating on the other.

Left: Google Nest. Right: wood stove.

A wood burner’s main function is to warm the house. But it also requires skills like chopping wood and keeping the fire burning, skills that are usually distributed amongst family members. It's the place in the house where the family gathers, and it changes throughout the day, blazing in the morning and simmering at night. It also tells you something about the seasons, as its role changes throughout the year.

Central heating performs the main function, the warming of the house, a lot more efficiently, but it has none of the other functions. There is no real engagement between the person and the device. Borgmann really regrets this because he says this engagement is necessary in order to understand the world and respond to it in a meaningful way.

Engagement vs. attention

Considering Borgmann, you could argue that a degree of friction might actually be a good thing, as it allows for real engagement, a conscious participation. A slightly weird example of this, perhaps, is the internet browser pop-up that asks you to sign up for a newsletter. The only reason it’s there is so you can close it. Tests have shown that as soon as you perform any type of action on a webpage, engagement goes up and you’re more prone to take action in the desired way.

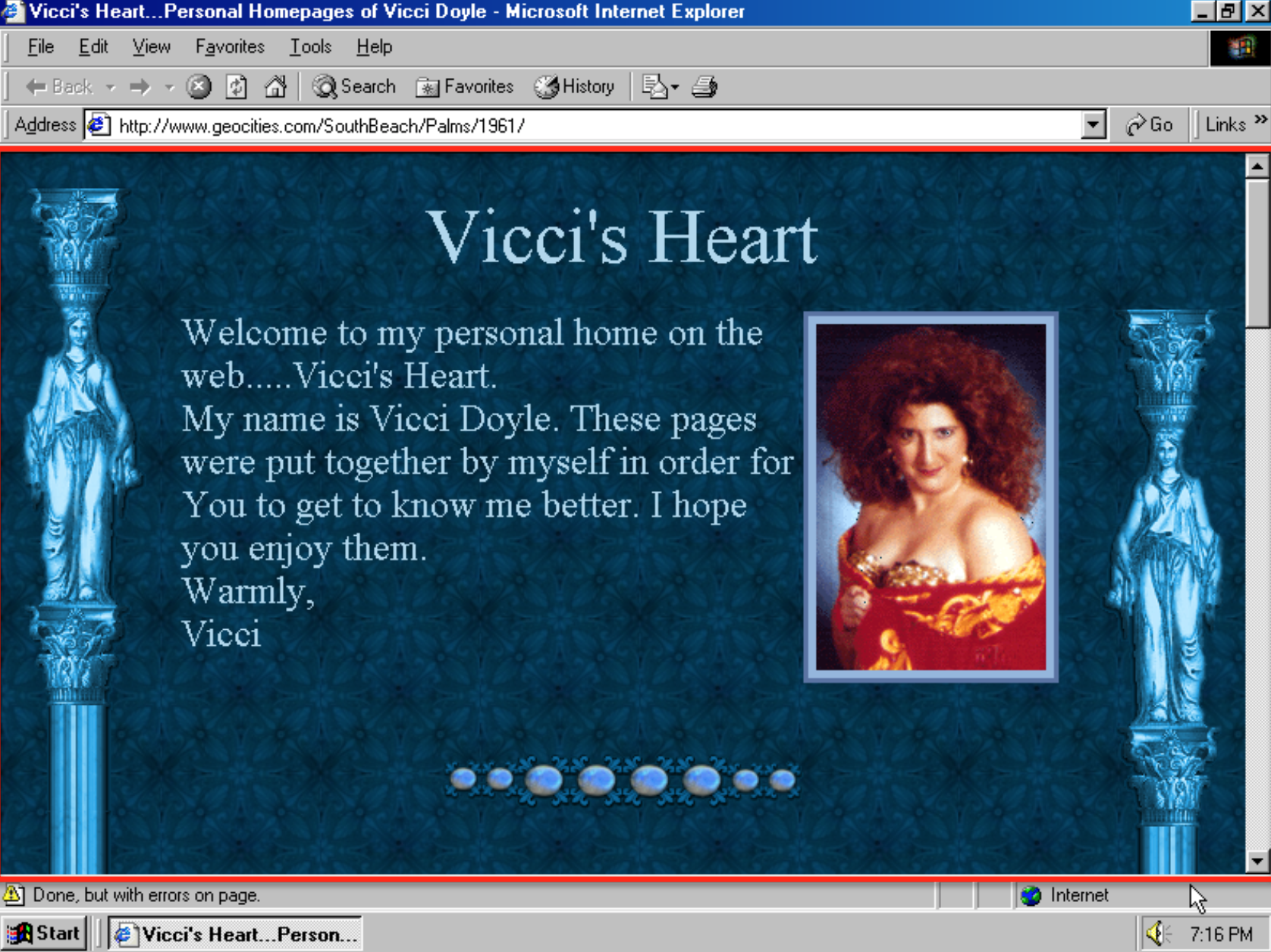

Another example: compare the average user’s webspace now with the webspace a user had 25 years ago.

In the nineties, having your personal homepage required imagination and skills. What am I going to fill my page with? What will it look like? What will the URL be? How do I get my animated gifs to spiral through the screen? At the very least you needed a basic understanding of html. These days this is what your personal space on the internet looks like.

Your Facebook profile has a little box where you’re allowed to type, and there are a few designated places for images, in a specified file type and size. We can comment or better yet, choose from a set of predefined emotions. Facebook has definitely made it easier for us to create and maintain this space we call our own, but it doesn’t require us to have any skills or use our imagination. Yes, Facebook has managed to harvest the attention of an unbelievable number of users, but how many of those feel really engaged with their account? More and more often, people speak of it as a necessary evil.

Our communication platforms are failing us

This is important because something in the way our communication platforms are designed has resulted in a lively trade in likes and followers. It leads to people spending days and days arguing with Twitter-bots. It also contributes to Sylvana Simons being abused and threatened relentlessly (see "20 Are Convicted for Sexist and Racist Abuse of Dutch Politician"). It makes it possible for Zoë Quinn’s on-again-off-again ex-boyfriend to vent his anger over being dumped and mobilize a crowd of angry white dudes to ruin her life (see "Zoë Quinn: after Gamergate, don't 'cede the internet to whoever screams the loudest'"). Something in the way our platforms are designed allows Steve Bannon to round up that same mob of angry dudes, but this time channel their anger into supporting Donald Trump’s presidential campaign (see "What Gamergate should have taught us about the 'alt-right'"). The design of our communication platforms isn’t the cause of these issues, but it is reinforcing and intensifying existing imbalances. It turns us into spectators, and abuse into a spectacle. Flavia Dzodan refers to this as a “theatre of cruelty”. It’s resulting in hate speech.

Fighting hate speech

So back to this. The debate on how to deal with harmful content generally focuses on two arguments, or solutions if you will. The first is content moderation, the second is counter speech. Let’s take a look at both solutions.

Content moderation

The relationship between governments and the big networking platforms is quite complex. Your opinion on how these platforms should operate, and whether they should be regulated, depends among other things on whether you consider a platform like Facebook or YouTube to be the open internet or a closed, privately owned space. The truth, of course, lies somewhere in the middle. These are private spaces that are used as public spaces, and they are becoming larger and more powerful by the minute.

Faced with this situation, and not wanting to be seen to be doing nothing to counter harmful content, governments are forcing platforms to do something, anything, and thereby skillfully avoid taking responsibility themselves. The outsourcing of public responsibility to private parties, while being very vague about what that responsibility entails, is bad for approximately a gazillion reasons. Here are four of the most important ones.


1. Platforms will over-censor

First, it encourages platforms to be (legally and politically) safe rather than sorry when it comes to the content users upload. That means they will expand the scope of their terms of service to be able to delete any content or any account for any reason. Without doubt this will occasionally help platforms take down content that shouldn’t be online. But it has already led to a lot of content being removed that had every right to be online. One recent example of such a screw-up is YouTube accidentally removing thousands of videos documenting war crimes in Syria (see "YouTube Removes Videos Showing Atrocities in Syria").

2. Multinationals will decide what's right and wrong

Second, having your freedom of speech regulated by US multinationals will mean, if not now then in the future, that nothing you say will be allowed to be heard unless it falls within the boundaries of US morality, or unless it suits the business interests of US companies. Another possible outcome: if Facebook chooses to comply with countries’ individual demands (and why wouldn’t it?), the result could also be that only those pieces of information will be allowed that are acceptable to every one of those countries.

We're already seeing examples of how this could play out. Earlier this year UK vlogger Rowan Ellis started noticing that LGBTQ+-related content wasn’t showing up in YouTube’s Restricted Mode, leading Ellis to remark that "there is a bias somewhere within that process equating LGBTQ+ with ‘not family friendly.’" (see "YouTube's Restricted Mode Is Hiding Some LGBT Content [Update]").

These aren’t accidents. They’re the result of how these systems are designed and by whom they’re designed. It’s also the result of increasing pressure on platforms to do something, however flawed that something might be.


3. Privatized law enforcement will replace actual law enforcement

Third, putting companies in charge of taking down content is a form of privatized law enforcement that involves zero enforcement of the actual law. In doing so, you bypass the legal system, and people who should actually be charged with an offense and appear before a court never do. People whose content is wrongfully removed have very few ways to object.

4. We'll become more vulnerable

Finally, this normalizes a situation where companies can regulate us in ways governments legally cannot, as companies aren’t bound (or hindered) by international law or national constitutions.

So, put gently, this solution is not proving to be, and most probably won’t be in the future, an unqualified success. Let’s take a look at the other proposal, counter speech.

Counter speech

This argument boils down to the belief that the solution to hate speech is free speech. We cannot have a functioning democracy without free speech, but this argument completely neglects the underlying societal power imbalances, the systemic sexism and racism that inform our media, our software and our ideas.

An example. In 2015 Breitbart published an article in which it criticized Anita Sarkeesian and Zoë Quinn for speaking at a United Nations event about cyber violence against women. Breitbart wrote:

“feminists are trying to redefine violence and harassment to include disobliging tweets and criticisms of their work. […] In other words: someone said “’you suck’” to Anita Sarkeesian and now we have to censor the internet. Who could have predicted such a thing? It’s worth noting, by the way, that if Sarkeesian’s definition is correct, Donald Trump is the world’s greatest victim of ‘cyber-violence.’ Someone should let him know.”

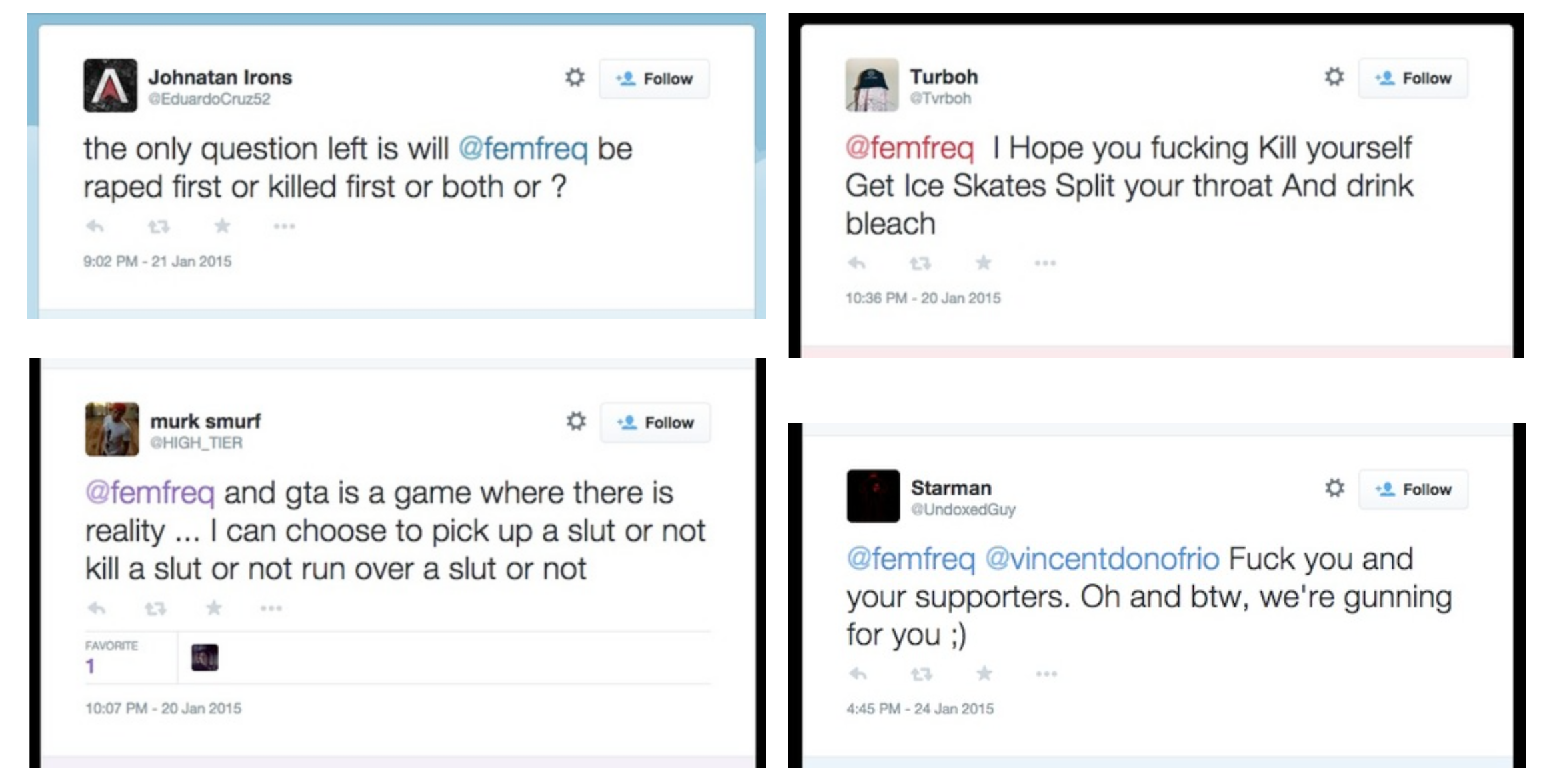

Two interesting things are happening here. First, the writer argues that what is happening to these women shouldn't be considered violence or harassment. So let's take a look at the types of tweets Breitbart calls “disobliging”. Here are a few of one week’s worth of messages (157 in total) Sarkeesian received on Twitter.

Anita Sarkeesian is a media critic and blogger who became famous in 2012 for her YouTube series "Tropes vs. Women in Video Games", in which she examines tropes in the depiction of female video game characters. For this, she received rape and death threats, her accounts were hacked, her personal information distributed online, her entry on Wikipedia was vandalized with racial slurs and sexual images, she received drawings of herself being raped, venues at which she was supposed to speak received bomb threats, and one particularly creative dude created a video game called "Beat Up Anita Sarkeesian". Can this really be reduced to "someone saying 'you suck'"? No.

And that's in part because of the second thing that stands out in this quote, namely the writer equating cyber violence directed at Anita Sarkeesian with cyber violence directed at Donald Trump. I’m sure Donald Trump receives some nasty, or “disobliging”, tweets, and we’ve seen proof of Twitter keeping him up at night. However, Donald Trump is a powerful, rich, white male. He's in a position to simply shrug off harassment, or make it go away. When directed at someone like Sarkeesian, who isn't a powerful, rich, white male, cyber violence can actually bring life to a halt.

Not all voices are equal

As Bruce Schneier neatly put it in "Power and the Internet": "[Technology] magnifies power in both directions. When the powerless found the Internet, suddenly they had power. But [...] eventually the powerful behemoths woke up to the potential – and they have more power to magnify." We have to acknowledge that as long as there are structural imbalances in society, not all voices are equal. And until they are, counter speech is never going to be a solution for hate speech. So this solution, too, will continue to fall short.

Why design matters

Which brings us back to design. Just like the internet isn’t responsible for hate speech, we can’t and shouldn’t look to design to solve it. But design can help mitigate some of our communication platforms’ more harmful effects.

Design influences behavior

Two things about design. First, design influences behavior. We know this. You can feel the influence of design in really every product or device you use. Who doesn't feel a surge of excitement when encountering one of these?

Design is political

Second, design is always political. Take Paris. In 1853, Napoleon III commissioned Baron Haussmann to build him a new city. What Haussmann created was the now iconic city plan with its wide boulevards and beautiful lines of sight. He had a state-of-the-art sewage system built that drastically improved life. But he also knocked down 12,000 buildings, forcing many of the poorest residents out of their homes. The new design for the city served some more than others.

Champs Élysées, Paris, by Ian Abbott

Closer to home, in the Netherlands, urban planning and housing design from the fifties reflect post-war ideals of total transparency.

J. Versnel, Model home Slotermeer. Taken from: Roel Griffioen, "Het glazen huis: Privacy en openbaarheid in de vroegnaoorlogse stad", Tijdschrift Kunstlicht.

I was reminded of another example when Rihanna launched her line of beauty products, Fenty Beauty. It really rattled the major cosmetic companies because it drew attention to the fact that, generally speaking, they make products with a very limited set of people in mind.

Then, of course, there’s our favorite new language: emoji. The Unicode Consortium is a group of people who make sure that when you press down on a letter on your keyboard, every computer everywhere in the world knows what character to show on the screen. They do this for emoji too. In addition, they decide which emoji get added to the library and which don’t. Looking at the emoji available, graphic designer Mantas Rimkus found that none of them helped him express his engagement with current affairs. He set up the project "demoji", an open platform where you can submit and use alternative emoji, which aims to represent, in emoji, the complexity of our world. Artist and researcher Lilian Stolk asks children to design the emoji they would like to add to the emoji alphabet and subsequently makes them available through sticker apps (More Moji is available in the App Store). One of the results:

Hijab emoji, result of a workshop at a high school in Amsterdam. tearsofjoy.nl.

Clever design could save our platforms

When it comes to platforms, there is a very recent example that shows the importance of design. In 2015, Coraline Ada Ehmke, a coder, writer and activist, was approached by GitHub to join their Community & Safety team. This team was tasked with “making GitHub more safe for marginalized people and creating features for project owners to better manage their communities.” Ehmke accepted the offer. (The examples referred to here were taken from Ehmke's article "Antisocial Coding: My Year at Github".)

Ehmke had experienced harassment on GitHub herself. A couple of years ago someone created a dozen repositories, and gave them all racist names. This person then added Ehmke as a contributor to those repositories, so that when you viewed her user page, it would be strewn with racial slurs – the names of the repositories.

A few months after Ehmke started, she finished a feature called “repository invitations”: project invites. This basically means that you can’t add another user to the project you’re working on without their consent. The harassment she had suffered wouldn’t be able to happen to anyone again. Instead of filtering out the bullshit, or going through an annoying and probably ineffective process of having bullshit removed, what Ehmke did was basically give the user control over her own space, and create a situation in which the bullshit never gets the chance to materialize.
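The principle behind such a feature can be sketched in a few lines of code. This is only an illustration of consent-based membership, not GitHub's actual implementation; the `Repository` class, its methods and the `profile_repositories` helper are all hypothetical names made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Repository:
    """Hypothetical repository with consent-based collaborator invites."""
    name: str
    owner: str
    collaborators: set[str] = field(default_factory=set)
    pending_invites: set[str] = field(default_factory=set)

    def invite(self, user: str) -> None:
        # An invite only records a pending request. Nothing is attached
        # to the invitee's profile until they accept.
        if user not in self.collaborators:
            self.pending_invites.add(user)

    def accept(self, user: str) -> None:
        # Only the invited user's own consent turns an invite into membership.
        if user not in self.pending_invites:
            raise PermissionError(f"{user} has no pending invite to {self.name}")
        self.pending_invites.remove(user)
        self.collaborators.add(user)

    def decline(self, user: str) -> None:
        # Declining simply drops the invite.
        self.pending_invites.discard(user)


def profile_repositories(user: str, repos: list[Repository]) -> list[str]:
    """Names shown on a user's page: repos they own or have agreed to join."""
    return [r.name for r in repos if user == r.owner or user in r.collaborators]


# Someone invites a user to a repository with an abusive name.
repo = Repository(name="abusive-name", owner="harasser")
repo.invite("target")
print(profile_repositories("target", [repo]))  # prints []
```

The design choice is that the default state is "not a member": the unwanted repository name never reaches the user's page, so there is nothing to filter or report afterwards.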


Ehmke also added the “first-time contributor” feature, a little badge that a project lead would see next to the name of a new contributor to that project. The idea was that it would encourage the project lead to be extra supportive of this person. The final feature she worked on was an easy way to add a code of conduct to your project. Pretty cool.

We need to do better

In about 9 weeks, Zoë Quinn received 10,400 tweets in relation to GamerGate. That’s 7 tweets per hour, 24 hours a day for 9 weeks. They weren’t tweets of support. Although women are more likely to encounter harassment online, misogyny isn’t the only problem. Our communication platforms are polluted with racism, incitement to hate, terrorist propaganda and Twitter-bot armies. We need to do something about it.
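As a rough check of that rate, assuming exactly nine full weeks of 24-hour days:

$$
\frac{10{,}400\ \text{tweets}}{9 \times 7 \times 24\ \text{hours}} = \frac{10{,}400}{1{,}512} \approx 6.9\ \text{tweets per hour}
$$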


The approaches we’re taking now simply aren’t good enough. Taking “censorship”, renaming it “content moderation”, and subsequently putting a few billion-dollar companies in charge isn’t a great idea if we envision a future where we still enjoy a degree of freedom. Holding on to the naive idea of the internet offering equal opportunity to all voices isn’t working either. What we need to do is keep the internet open, safeguard our freedom of speech and protect users. Ehmke has shown that smart design can help.

What you can do

Next time you set foot on the internet: be aware of the ways in which platforms influence your behavior. Question everything. Ask why. Ask for whom a service or feature works and for whom it doesn't. Ask what kind of user the designer of a product wants you to be. What are the interfaces hiding?

If you’re an activist: don’t let the success of your work depend on these platforms. Don’t allow Facebook, Google and Twitter to become a gatekeeper between you and the members of your community, and don’t consent to your freedom of speech becoming a byline in a 10,000-word terms of service "agreement". Be as critical of the technology you use to further your cause as you are of the people, lobbyists, institutions, companies and governments you’re fighting. (You can visit toolbox.bof.nl to explore some alternatives to commonly used tools, or visit a Privacy Café if you need help.) The technology you use matters.


Finally, if you’re a designer: be aware of how your cultural and political bias shapes your work. Build products that foster engagement rather than harvest attention. Don’t be afraid of friction. And if you have ideas about how design can help save the internet, please get in touch.